Multimodal grammars are used to advantage for rapidly building integration and understanding capabilities in interactive systems that support the simultaneous use of multiple input modalities. However, multimodal grammars can be brittle when faced with unexpected or erroneous input. A range of techniques for increasing the robustness of multimodal integration and understanding is therefore examined.

Robustness of Language Models for Speech Recognition

Abstract

Multimodal grammars are effectively exploited for quick creation of integration and understanding capabilities for interactive systems supporting simultaneous use of multiple input modalities. However, multimodal grammars can be fragile with respect to unexpected or erroneous input. A range of different techniques for improving the robustness of multimodal integration and understanding is explored.

Keywords: Multimodal grammar; language model; robustness

Introduction

This article summarizes the basic ideas of an extensive publication [13]. Its aim is to present basic information about the robustness of language models for speech recognition in a very concise form.

The ongoing convergence of the Web with telephony, driven by technologies such as voice over IP, broadband Internet access, high-speed mobile data networks, handheld computers and smartphones, enables widespread deployment of multimodal interfaces which combine graphical user interfaces with natural modalities such as speech and pen. The critical advantage of multimodal interfaces is that they allow user input and system output to be expressed in the mode or modes to which they are best suited, given the task at hand, user preferences, and the physical and social environment of the interaction.

In order to support effective multimodal interfaces, natural language processing techniques, which have typically operated over linear sequences of speech or text, need to be extended in order to support integration and understanding of multimodal language distributed over multiple different input modes. Multimodal grammars provide an expressive mechanism for quickly creating language processing capabilities for multimodal interfaces supporting input modes such as speech and gesture. They support composite multimodal inputs by aligning speech input (words) and gesture input (represented as sequences of gesture symbols) while expressing the relation between the speech and gesture input and their combined semantic representation. Such grammars can be compiled into finite-state transducers, enabling effective processing of lattice input from speech and gesture recognition and mutual compensation for errors and ambiguities.

Techniques for Improving Robustness

The problem of speech recognition can be briefly represented as a search for the most likely word sequence through the network created by the composition of a language of acoustic observations, an acoustic model which is a transduction from acoustic observations to phone sequences, a pronunciation model which is a transduction from phone sequences to word sequences, and a language model acceptor [1]. The language model acceptor encodes the (weighted) word sequences permitted in an application.

Typically, an acceptor is built using either a hand-crafted grammar or using a statistical language model derived from a corpus of sentences from the application domain. Although a grammar could be written so as to be easily portable across applications, it suffers from being too prescriptive and has no metric for the relative likelihood of users’ utterances. In contrast, in the data-driven approach a weighted grammar is automatically induced from a corpus and the weights can be interpreted as a measure of the relative likelihoods of users’ utterances. However, the reliance on a domain-specific corpus is one of the significant bottlenecks of data-driven approaches, because collecting a corpus specific to a domain is an expensive and time-consuming task, especially for multimodal applications.

Language Model Using In-Domain Corpus

A class-based trigram language model was built from the domain corpus gathered in the data collection, which consists of 709 multimodal and speech-only utterances. The trigram language model is represented as a weighted finite-state acceptor [2] for speech recognition purposes. The performance of this model serves as the point of reference against which the language models trained on derived corpora are compared.

Grammar as Language Model

The multimodal context-free grammar (CFG) encodes the repertoire of language and gesture commands allowed by the system and their combined interpretations. The CFG can be approximated by a finite-state machine (FSM) with arcs labeled with language, gesture, and meaning symbols, using well-known compilation techniques [3]. Selecting the language symbol of each arc (projecting the FSM on the speech component) results in an FSM that can be used as the language model acceptor for speech recognition. Note that the resulting language model acceptor is unweighted if the grammar is unweighted, and that it is not robust to language variations in users’ input. However, due to the tight coupling of the grammars used for recognition and interpretation, every recognized string can be assigned a meaning representation (though it may not necessarily be the intended interpretation).
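The projection step can be illustrated with a minimal sketch. The arc format, the state numbers, and all labels below are assumptions made for illustration only; they are not taken from the system described in [13], and a real implementation would use a finite-state toolkit rather than plain lists.

```python
# Toy multimodal FSM: each arc is (source, target, speech, gesture, meaning).
# EPS marks an empty symbol on a tape. All labels are hypothetical.
EPS = "eps"
ARCS = [
    (0, 1, "phone", EPS, "<cmd>phone</cmd>"),
    (1, 2, "for", EPS, EPS),
    (2, 3, "this", "G_point", EPS),
    (3, 4, EPS, "G_rest_id", "<obj>SEM</obj>"),  # gesture-only arc
    (4, 5, "restaurant", EPS, EPS),
]
FINAL_STATES = {5}


def project_speech(arcs):
    """Keep only the speech label of every arc (projection on the speech tape)."""
    return [(src, dst, speech) for src, dst, speech, _gesture, _meaning in arcs]


def accepts(arcs, finals, words, start=0):
    """Unweighted acceptance test for a word sequence; EPS arcs consume no word."""
    agenda = [(start, 0)]
    seen = set()
    while agenda:
        state, pos = agenda.pop()
        if (state, pos) in seen:
            continue
        seen.add((state, pos))
        if state in finals and pos == len(words):
            return True
        for src, dst, label in arcs:
            if src != state:
                continue
            if label == EPS:
                agenda.append((dst, pos))
            elif pos < len(words) and words[pos] == label:
                agenda.append((dst, pos + 1))
    return False


speech_acceptor = project_speech(ARCS)
in_grammar = accepts(speech_acceptor, FINAL_STATES, "phone for this restaurant".split())
out_of_grammar = accepts(speech_acceptor, FINAL_STATES, "call this restaurant".split())
```

In this toy example the acceptor admits the in-grammar command but rejects the paraphrase with "call", which is the kind of brittleness the paragraph above refers to.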

Grammar-Based n-gram Language Model

A hand-crafted grammar typically suffers from the problem of being too restrictive and inadequate to cover the variations and extra-grammaticality of users’ input. In contrast, an n-gram language model derives its robustness by permitting all strings over an alphabet, albeit with different likelihoods. In an attempt to provide robustness to the grammar-based model, we created a corpus of k sentences by randomly sampling the set of paths of the grammar and built a class-based n-gram language model using this corpus. Although this corpus does not represent the true distribution of sentences in a domain, we are able to derive some of the benefits of n-gram language modeling techniques [4], [5].
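The sampling idea can be sketched as follows. The toy grammar, the function names, and the choice to pick rules uniformly at each expansion are assumptions for illustration; the sketch collects raw trigram counts only and leaves out the word classes and smoothing that a usable class-based model needs.

```python
import random
from collections import Counter

# Hypothetical toy CFG: keys are nonterminals, everything else is a word.
GRAMMAR = {
    "S": [["show", "CUISINE", "restaurants", "LOC"], ["phone", "for", "this", "one"]],
    "CUISINE": [["cheap"], ["italian"], ["cheap", "italian"]],
    "LOC": [["here"], ["in", "this", "area"]],
}


def sample_sentence(symbol, rng):
    """Expand a symbol by choosing one of its rules uniformly at random."""
    if symbol not in GRAMMAR:
        return [symbol]
    words = []
    for child in rng.choice(GRAMMAR[symbol]):
        words.extend(sample_sentence(child, rng))
    return words


def sample_corpus(k, seed=0):
    """Draw k sentences from the grammar."""
    rng = random.Random(seed)
    return [sample_sentence("S", rng) for _ in range(k)]


def trigram_counts(corpus):
    """Count trigrams with sentence-boundary padding (no smoothing)."""
    counts = Counter()
    for sentence in corpus:
        padded = ["<s>", "<s>"] + sentence + ["</s>"]
        for i in range(len(padded) - 2):
            counts[tuple(padded[i:i + 3])] += 1
    return counts


corpus = sample_corpus(5)
counts = trigram_counts(corpus)
```

Because an n-gram model estimated from these counts and then smoothed assigns some probability to every word sequence, it can score inputs that the grammar itself would reject.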

Combining Grammar and Corpus

A straightforward extension of the idea of sampling the grammar in order to create a corpus is to select those sentences out of the grammar which make the resulting corpus “similar” to the corpus collected in the pilot studies. In order to create this corpus, we choose the k most likely sentences as determined by a language model built using the collected corpus. A mixture model is then built by interpolating, with a mixture weight, the model trained on the corpus of extracted sentences and the model trained on the collected corpus.
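A minimal sketch of the selection and interpolation steps is given below. To stay short it uses add-one-smoothed unigram models instead of class-based trigrams, and the example sentences, the function names, and the mixture weight of 0.5 are assumptions rather than values from [13].

```python
import math
from collections import Counter


def train_unigram(corpus, vocab):
    """Add-one-smoothed unigram model over a fixed vocabulary."""
    counts = Counter(word for sentence in corpus for word in sentence)
    total = sum(counts.values()) + len(vocab)
    return {word: (counts[word] + 1) / total for word in vocab}


def log_prob(sentence, model):
    """Log-probability of a sentence under a unigram model."""
    return sum(math.log(model[word]) for word in sentence)


def select_top_k(candidates, model, k):
    """Keep the k grammar sentences that the collected-corpus model finds most likely."""
    return sorted(candidates, key=lambda s: log_prob(s, model), reverse=True)[:k]


def interpolate(p_selected, p_collected, lam):
    """Linear mixture: lam * p_selected + (1 - lam) * p_collected."""
    return {w: lam * p_selected[w] + (1 - lam) * p_collected[w] for w in p_collected}


collected = [["show", "cheap", "restaurants"], ["show", "restaurants", "here"]]
grammar_sentences = [
    ["show", "italian", "restaurants"],
    ["phone", "for", "this", "one"],
    ["show", "cheap", "restaurants", "here"],
]
vocab = sorted({w for s in collected + grammar_sentences for w in s})

collected_model = train_unigram(collected, vocab)
selected = select_top_k(grammar_sentences, collected_model, k=2)
selected_model = train_unigram(selected, vocab)
mixture = interpolate(selected_model, collected_model, lam=0.5)
```

Ranking by raw log-probability favors short sentences; a length-normalized score is one possible alternative, but that choice is outside what the text above specifies.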

Class-Based Out-of-Domain Language Model

An alternative to using in-domain corpora for building language models is to “migrate” a corpus of a different domain to our domain. The process of migrating a corpus involves suitably generalizing the corpus to remove information that is specific only to the other domain and instantiating the generalized corpus to our domain. Although there are a number of ways of generalizing the out-of-domain corpus, the generalization we have investigated involved identifying linguistic units, such as noun and verb chunks, in the out-of-domain corpus and treating them as classes. These classes are then instantiated to the corresponding linguistic units from our domain. The identification of the linguistic units in the out-of-domain corpus is done automatically using a supertagger [6]. A corpus collected in the context of a software help-desk application is used as an example out-of-domain corpus. In cases where the out-of-domain corpus is closely related to the domain at hand, a more semantically driven generalization might be more suitable.
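The generalize-then-instantiate procedure can be sketched as below. The chunked input is assumed to be the output of a chunker or supertagger that is not shown; the class labels, the example sentences, and the random choice of in-domain units are all hypothetical.

```python
import random

# Assumed chunker output: (label, text) pairs; label None means "keep the text".
OUT_OF_DOMAIN = [
    [(None, "how do i"), ("VERB_CHUNK", "install"), ("NOUN_CHUNK", "the printer driver")],
    [(None, "i want to"), ("VERB_CHUNK", "reset"), ("NOUN_CHUNK", "my password")],
]

# Hypothetical in-domain linguistic units for each class.
IN_DOMAIN_UNITS = {
    "VERB_CHUNK": ["find", "phone"],
    "NOUN_CHUNK": ["a cheap restaurant", "the subway station"],
}


def generalize(chunked_sentence):
    """Replace every labeled chunk by its class label."""
    return [label if label is not None else text for label, text in chunked_sentence]


def instantiate(template, units, rng):
    """Fill every class label with a randomly chosen in-domain unit."""
    return " ".join(rng.choice(units[item]) if item in units else item for item in template)


def migrate(corpus, units, seed=0):
    """Generalize an out-of-domain corpus and instantiate it in the target domain."""
    rng = random.Random(seed)
    return [instantiate(generalize(sentence), units, rng) for sentence in corpus]


migrated = migrate(OUT_OF_DOMAIN, IN_DOMAIN_UNITS)
```

The carrier phrases ("how do i", "i want to") survive the migration, while the domain-specific chunks are swapped for in-domain ones.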

Adapting the Switchboard Language Model

The performance of a large-vocabulary conversational speech recognition system was investigated when applied to a specific domain. The Switchboard corpus was used as an example of a large-vocabulary conversational speech corpus. A trigram model was built using the 5.4-million-word corpus, and the effect of adapting the Switchboard language model given k in-domain untranscribed speech utterances was investigated. The adaptation is done by first recognizing the in-domain speech utterances and then building a language model from the corpus of recognized text. This bootstrapping mechanism can be used to derive a domain-specific corpus and language model without any transcriptions [7], [8].
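The bootstrapping loop can be sketched in a few lines. The `recognize` callable stands in for a recognizer running with the out-of-domain language model; it, the utterance identifiers, and the canned outputs are hypothetical, and the sketch stops at n-gram counts rather than a full language model.

```python
from collections import Counter


def ngram_counts(sentences, n=3):
    """Count n-grams with sentence-boundary padding (no smoothing)."""
    counts = Counter()
    for words in sentences:
        padded = ["<s>"] * (n - 1) + list(words) + ["</s>"]
        for i in range(len(padded) - n + 1):
            counts[tuple(padded[i:i + n])] += 1
    return counts


def adapt_without_transcripts(recognize, utterances, n=3):
    """Recognize untranscribed utterances, then train on the recognized text."""
    recognized = [recognize(utterance) for utterance in utterances]
    return ngram_counts(recognized, n), recognized


# Stand-in for ASR output produced with the out-of-domain model (made up).
FAKE_ASR_OUTPUT = {
    "utt1.wav": ["show", "cheap", "restaurants"],
    "utt2.wav": ["show", "restaurants", "here"],
}


def fake_recognize(utterance_id):
    return FAKE_ASR_OUTPUT[utterance_id]


adapted_counts, recognized_text = adapt_without_transcripts(
    fake_recognize, ["utt1.wav", "utt2.wav"]
)
```

No reference transcriptions appear anywhere in the loop; recognition errors therefore propagate into the adapted model, which is the price of avoiding manual transcription.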

Adapting a Wide-Coverage Grammar

There have been a number of computational implementations of wide-coverage, domain-independent, syntactic grammars for English in various grammar formalisms [9], [10], [11]. This section describes a method that exploits one such grammar implementation, in the Lexicalized Tree-Adjoining Grammar (LTAG) formalism, to derive domain-specific corpora. An LTAG consists of a set of elementary trees (supertags) [6], each associated with a lexical item (the head). Supertags encode predicate–argument relations of the head and the linear order of its arguments with respect to the head. A supertag can be represented as a finite-state machine with the head and its arguments as arc labels. The set of sentences generated by an LTAG can be obtained by combining supertags using substitution and adjunction operations. In related work [12], it has been shown that for a restricted version of LTAG, the combinations of a set of supertags can be represented as an FSM. This FSM compactly encodes the set of sentences generated by an LTAG grammar. It is composed of two machines: a lexical FST and a syntactic FSM.

The lexical FST transduces input words to supertags. The input to the construction of the lexical machine is assumed to be a list of words with their parts of speech. Once the set of supertags that each word should be associated with has been determined, we create a disjunctive finite-state transducer (FST) for all words which transduces the words to their supertags.
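As a rough illustration, the lexical machine can be approximated by a word-to-supertag table. The lexicon, the part-of-speech tags, the supertag names, and the rule that supertags are chosen by part of speech alone are assumptions of this sketch, not details from [12] or [13].

```python
from itertools import product

# Hypothetical input: words with their parts of speech.
LEXICON = [("show", "V"), ("restaurants", "N"), ("cheap", "A")]

# Hypothetical supertag inventory, indexed by part of speech.
SUPERTAGS_BY_POS = {
    "V": ["t_imperative", "t_transitive"],
    "N": ["t_NP"],
    "A": ["t_adj_mod"],
}


def build_lexical_fst(lexicon, supertags_by_pos):
    """Single-state transducer stored as a map from input word to output supertags."""
    fst = {}
    for word, pos in lexicon:
        fst.setdefault(word, set()).update(supertags_by_pos[pos])
    return fst


def transduce(words, fst):
    """Return every supertag sequence the transducer can output for the words."""
    options = [sorted(fst[word]) for word in words]
    return [list(sequence) for sequence in product(*options)]


lexical_fst = build_lexical_fst(LEXICON, SUPERTAGS_BY_POS)
supertag_sequences = transduce(["show", "restaurants"], lexical_fst)
```

The disjunction shows up as several candidate supertags per word; the syntactic FSM is what later filters the combinations that form a sentence.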

For the syntactic FSM, we take the union of all the FSMs for each supertag which corresponds to an initial tree (i.e., a tree which need not be adjoined). We then perform a series of iterative replacements: in each iteration, each arc labeled by a supertag is replaced by the lexicalized version of that supertag’s FSM. Of course, there are many more replacements in each iteration than in the previous one. Based on the syntactic complexity in the domain (such as number of modifiers, clausal embedding, and prepositional phrases), we use five rounds of iteration. The number of iterations restricts the syntactic complexity but not the length of the input. This construction is in many ways similar to constructions proposed for CFGs [3]. One difference is that, because everything starts from TAG, recursion is already factored, and there is no need to find cycles in the rules of the grammar.
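The effect of bounding the number of replacement rounds can be seen in a heavily simplified sketch. Each supertag FSM is reduced here to a few acyclic label sequences, only substitution is modeled (no adjunction), and the labels are invented, so unlike the construction described above this toy version also bounds sentence length.

```python
from itertools import product

# Hypothetical lexicalized supertag machines, simplified to label sequences.
# Keys are slots that still have to be replaced; all other labels are words.
SUPERTAG_PATHS = {
    "S": [["show", "NP"]],
    "NP": [["restaurants"], ["restaurants", "PP"]],
    "PP": [["near", "NP"]],
}


def expand_once(path):
    """Replace every slot in one path by each of its alternatives."""
    options = [SUPERTAG_PATHS.get(label, [[label]]) for label in path]
    return [[word for part in combo for word in part] for combo in product(*options)]


def expand(start, rounds):
    """Apply a fixed number of replacement rounds, then keep fully lexicalized paths."""
    paths = [[start]]
    for _ in range(rounds):
        paths = [new_path for path in paths for new_path in expand_once(path)]
    return [p for p in paths if not any(label in SUPERTAG_PATHS for label in p)]


shallow = expand("S", rounds=2)
deeper = expand("S", rounds=4)
```

With two rounds only the unmodified noun phrase is reachable; with four rounds one level of prepositional-phrase embedding appears, which mirrors how the iteration count limits syntactic complexity.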

A domain-specific corpus is derived by constructing a lexicon consisting of pairings of words with their supertags that are relevant to this domain. Then the grammar is compiled to build an FSM of all sentences up to a given depth of recursion. This FSM is sampled and a language model is built as discussed in Section 2.3. Given untranscribed utterances from a specific domain, the language model can also be adapted as discussed in Section 2.6.

Speech Recognition Experiments

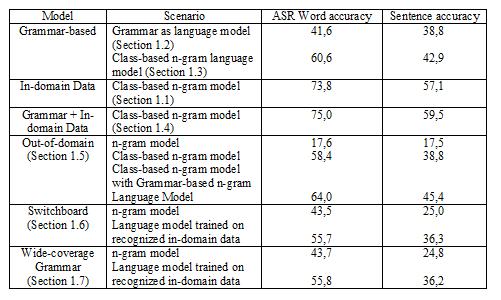

A set of experiments evaluated the performance of the language models in a multimodal system. Word accuracy and string (sentence) accuracy are used for evaluating ASR (Automatic Speech Recognition) output. All results presented in this section are based on 10-fold cross-validation experiments run on the 709 spoken and multimodal exchanges. Table 1 presents the performance results for ASR word and sentence accuracy using language models trained on the collected in-domain corpus as well as on corpora derived using the different methods discussed in Sections 2.2–2.7.
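The two measures and the fold construction can be sketched as follows. The sketch assumes the common definitions (word accuracy as one minus the word-level edit distance divided by the number of reference words, sentence accuracy as the fraction of exact matches) and a simple round-robin fold assignment; the source does not spell out either, and the example sentences are made up.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two word lists."""
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, 1):
        cur = [i]
        for j, hyp_word in enumerate(hyp, 1):
            cur.append(min(prev[j] + 1,                            # deletion
                           cur[j - 1] + 1,                         # insertion
                           prev[j - 1] + (ref_word != hyp_word)))  # substitution
        prev = cur
    return prev[-1]


def word_accuracy(refs, hyps):
    """1 - (substitutions + deletions + insertions) / reference words."""
    errors = sum(edit_distance(r, h) for r, h in zip(refs, hyps))
    total = sum(len(r) for r in refs)
    return 1 - errors / total


def sentence_accuracy(refs, hyps):
    """Fraction of hypotheses that match their reference exactly."""
    return sum(r == h for r, h in zip(refs, hyps)) / len(refs)


def ten_fold_splits(n_items, n_folds=10):
    """Yield (train_indices, test_indices) for each fold, assigned round-robin."""
    for fold in range(n_folds):
        test = [i for i in range(n_items) if i % n_folds == fold]
        train = [i for i in range(n_items) if i % n_folds != fold]
        yield train, test


refs = [["show", "cheap", "restaurants"], ["phone", "this", "one"]]
hyps = [["show", "restaurants"], ["phone", "this", "one"]]
wa = word_accuracy(refs, hyps)      # one deletion over six reference words
sa = sentence_accuracy(refs, hyps)  # one of two sentences fully correct
folds = list(ten_fold_splits(709))
```

Under this definition word accuracy can become negative when a hypothesis contains many insertions, which is why it is usually reported alongside sentence accuracy.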

It is immediately apparent that the hand-crafted grammar performs poorly as a language model and that a language model trained on the collected domain-specific corpus performs significantly better than models trained on derived data. However, it is encouraging to note that a model trained on a derived corpus (obtained by combining the migrated out-of-domain corpus and a corpus created by sampling the in-domain grammar) comes within 10% word accuracy of the model trained on the collected corpus. There are several other noteworthy observations from these experiments.

The language model trained on data sampled from the grammar performs dramatically better than the hand-crafted grammar. This technique provides a promising direction for authoring portable grammars that can be sampled subsequently to build robust language models when no in-domain corpora are available. Furthermore, combining grammar and in-domain data, as described in Section 2.4 (Combining Grammar and Corpus), outperforms all other models significantly.

Table 1 – Performance results for ASR word and sentence accuracy using models trained on data derived from different methods of bootstrapping domain-specific data [13].

For the experiment on the migration of an out-of-domain corpus, a corpus from a software help-desk application was used. Table 1 shows that the migration of data using linguistic units as described in Section 2.5 significantly outperforms a model trained only on the out-of-domain corpus. Also, combining the grammar sampled corpus with the migrated corpus provides further improvement.

The performance of the Switchboard model on the domain is presented in the fifth row of Table 1. A trigram model was built using a 5.4-million-word Switchboard corpus, and the effect of adapting the resulting language model on in-domain untranscribed speech utterances was investigated. The adaptation is done by first running the recognizer on the training partition of the in-domain speech utterances and then building a language model from the recognized text. The performance can be significantly improved using the adaptation technique.

The last row of Table 1 shows the results of using the domain-specific lexicon to generate a corpus from a wide-coverage grammar, training a language model, and adapting the resulting model using in-domain untranscribed speech utterances, as was done for the Switchboard model. The class-based trigram model was built using 500,000 randomly sampled paths from the network constructed by the procedure described in Section 2.7 (Adapting a Wide-Coverage Grammar). It is interesting to note that the performance is very similar to that of the Switchboard model, given that the wide-coverage grammar, unlike models derived from Switchboard data, is not designed for conversational speech. The data from the domain has some elements of conversational-style speech which the Switchboard model models well, but it also has syntactic constructions that are adequately modeled by the wide-coverage grammar.

Conclusion

A range of techniques for building language models for speech recognition, applicable at different development phases of an application, was presented. Although the utility of in-domain data cannot be obviated, it was shown that there are ways to approximate this data with a combination of grammar and out-of-domain data. These techniques are particularly useful in the initial phases of application development when there is very little in-domain data. The technique of authoring a domain-specific grammar that is sampled for n-gram model building presents a good trade-off between time-to-create and the robustness of the resulting language model. This method can be extended by incorporating suitably generalized out-of-domain data, in order to approximate the distribution of n-grams in the in-domain data. If development time is of utmost importance, it was shown that using a large out-of-domain corpus (Switchboard) or a wide-coverage domain-independent grammar can yield a reasonable language model.

References

[1] Pereira, F. C. N. and M. D. Riley. Speech recognition by composition of weighted finite automata. In E. Roche and Y. Schabes, editors, Finite State Devices for Natural Language Processing. MIT Press, Cambridge, MA, USA, pages 431–456, 1997

[2] Allauzen, C., M. Mohri, and B. Roark. Generalized algorithms for constructing statistical language models. In Proceedings of the Association for Computational Linguistics, pages 40–47, Sapporo, 2003

[3] Nederhof, M. J. Regular approximations of CFLs: A grammatical view. In Proceedings of the International Workshop on Parsing Technology, pages 159–170, Boston, MA, 1997

[4] Galescu, L., E. K. Ringger, and J. F. Allen. Rapid language model development for new task domains. In Proceedings of the ELRA First International Conference on Language Resources and Evaluation (LREC), pages 807–812, Granada, 1998

[5] Wang, Y. and A. Acero. Combination of CFG and n-gram modeling in semantic grammar learning. In Proceedings of the Eurospeech Conference, pages 2809–2812, Geneva, 2003

[6] Bangalore, S. and A. K. Joshi. Supertagging: An approach to almost parsing. Computational Linguistics, 25(2):237–265, 1999

[7] Bacchiani, M. and B. Roark. Unsupervised language model adaptation. In Proceedings of the International Conference on Acoustics, Speech, and Signal Processing, pages 224–227, Hong Kong, 2003

[8] Souvignier, B. and A. Kellner. Online adaptation for language models in spoken dialogue systems. In International Conference on Spoken Language Processing, pages 2323–2326, Sydney, 1998

[9] Flickinger, D., A. Copestake, and I. Sag. HPSG analysis of English. In W. Wahlster, editor, Verbmobil: Foundations of Speech-to-Speech Translation. Springer–Verlag, Berlin, pages 254–263, 2000

[10] Clark, S. and J. Hockenmaier. Evaluating a wide-coverage CCG parser. In Proceedings of the LREC 2002, Beyond Parseval Workshop, pages 60–66, Las Palmas, 2002

[11] XTAG. A lexicalized tree-adjoining grammar for English. Technical report, University of Pennsylvania. Available at www.cis.upenn.edu/~xtag/gramrelease.html, 2001

[12] Rambow, O., S. Bangalore, T. Butt, A. Nasr, and R. Sproat. Creating a finite-state parser with application semantics. In Proceedings of the International Conference on Computational Linguistics (COLING 2002), pages 1–5, Taipei, 2002

[13] Bangalore, S. and M. Johnston. Robust understanding in multimodal interfaces. Computational Linguistics, 35(3):345–380, 2009